Transform LLMs in 13 Lines of Code with Defined.ai’s Data Annotation API

As the rapid rise in ChatGPT’s popularity signals, it is only a matter of time before large language models (LLMs) find their way into every facet of our lives. To remain competitive and meet the demands of modern business, companies will need to adapt by either integrating with third-party models or building their own. Those who choose the latter route will need much more than just a large dataset to train on.

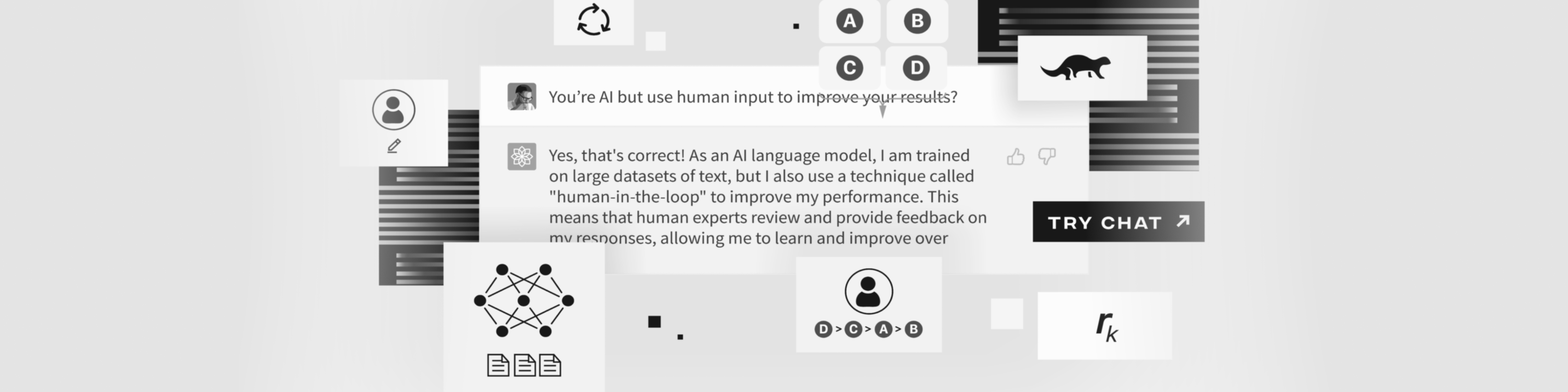

Human-in-the-loop annotations

Human-in-the-loop (HITL) annotations are essential for creating high-quality LLMs because they refine and improve the accuracy of machine-generated outputs. Human annotators review and correct those outputs, and the corrections are then used to train and improve the underlying models. This process makes the models more robust, so that the text they generate is relevant, accurate, and natural sounding.

Incorporating HITL annotations into the training process is critical. Ethically sourcing large volumes of annotations, however, is rife with risks and challenges (as seen with OpenAI’s work with the labeling company Sama). Defined.ai offers an intelligent data infrastructure that can provide HITL annotations with high quality and fast turnaround. This is done through a programmable REST API that can be integrated directly into your data and training processes. Best of all, your technical teams can achieve this with minimal effort, in as little as 13 lines of code!

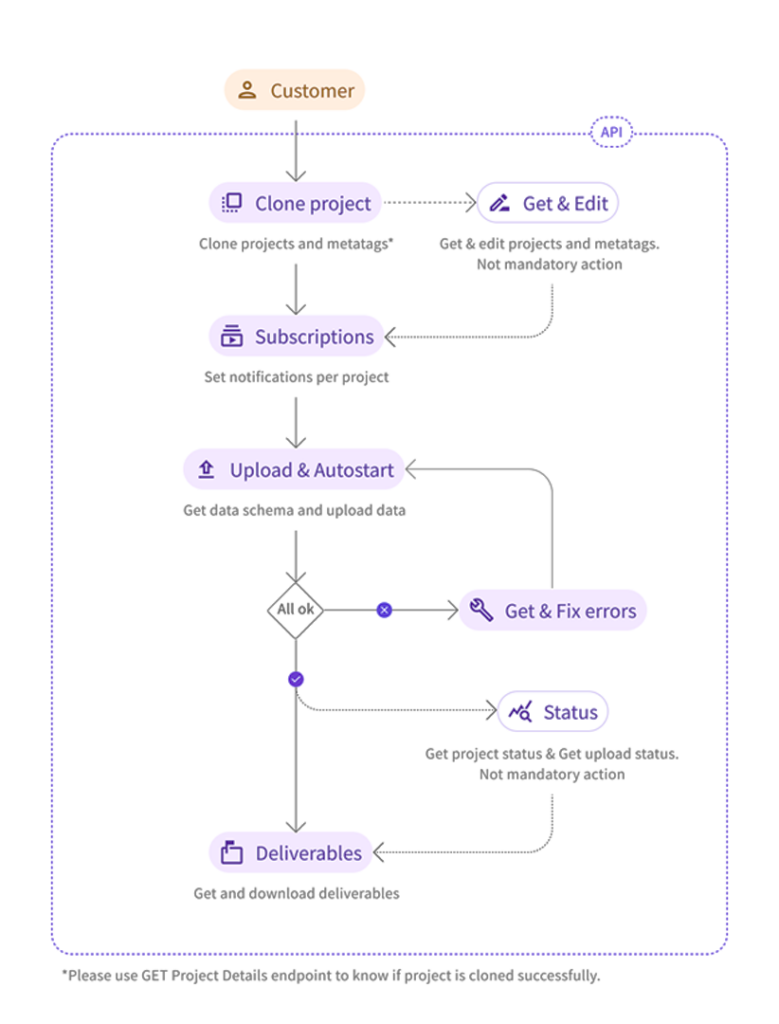

Guide to using the Defined.ai API: Managing crowdsourced data annotation jobs for large language models (LLMs)

Getting Started

After acquiring an enterprise account from Defined.ai (reach out today, they are free!), using the API is as simple as a few REST calls. With the help of your customer success team, you will configure the project templates that you clone every time you want to launch a new annotation project. Together, you will configure things like which languages and locales you want the job to run in and how many annotators you want “judging” each sample. You will also work with your customer success team to set up the interface that your annotators will see, as well as the instructions they will read before beginning the annotation work.

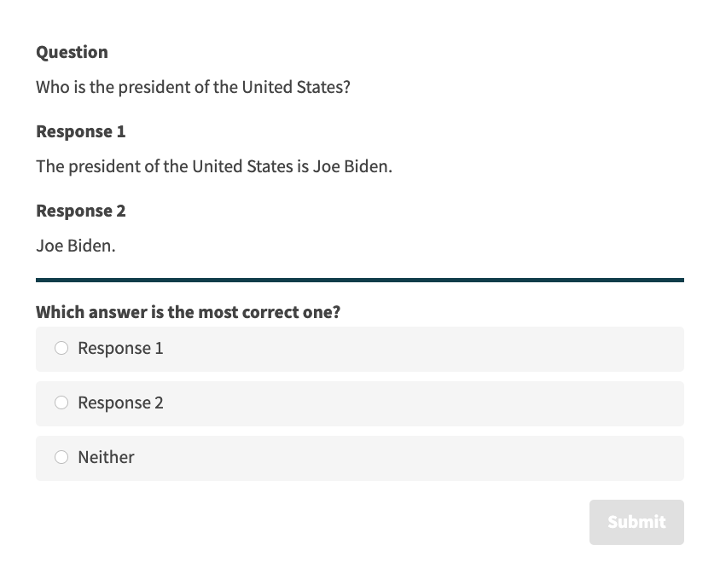

Here is an example of the job UI for a HITL job judging “naturalness”:

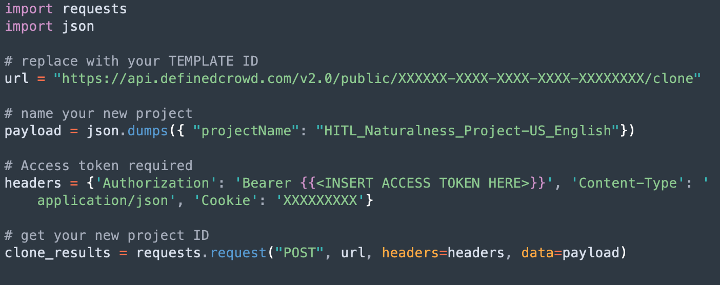

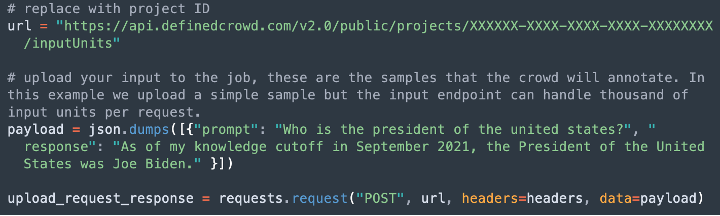

Launching a Project

Once the project template is created, you can clone it using its “template ID” and a simple POST request to the clone endpoint. In this walkthrough, we will simulate creating a project that judges samples for their “naturalness”. The project will be for English-speaking annotators located in the United States.

After you successfully clone the template, you will be given the ID of the newly created project. This is the project you will upload your samples to and download results from. Using your new project ID, use the upload endpoint to submit your “input units” to the job.
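As a rough sketch of these two calls, the snippet below builds (but does not send) a clone request and an upload request using only the Python standard library. The base URL, the endpoint paths, the `inputUnits` field name, and the bearer-token header are all placeholders assumed for illustration; the real values come from the Defined.ai API documentation.

```python
import json
import urllib.request

# Hypothetical base URL; replace with the one from the API documentation.
BASE_URL = "https://api.example.com/v1"


def build_clone_request(template_id: str, token: str) -> urllib.request.Request:
    """Build a POST request that clones a project template (path is assumed)."""
    return urllib.request.Request(
        url=f"{BASE_URL}/templates/{template_id}/clone",
        data=b"",
        headers={"Authorization": f"Bearer {token}"},  # auth scheme is assumed
        method="POST",
    )


def build_upload_request(
    project_id: str, token: str, input_units: list
) -> urllib.request.Request:
    """Build a POST request that uploads input units to a cloned project."""
    body = json.dumps({"inputUnits": input_units}).encode("utf-8")  # field name is assumed
    return urllib.request.Request(
        url=f"{BASE_URL}/projects/{project_id}/input-units",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send either request, you would pass it to `urllib.request.urlopen` and read the new project ID from the clone response; the name of that response field also depends on the real API.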

Upon successful upload, the project will be ready to start. The samples you have uploaded will be sent to the crowd of annotators for judgment. The turnaround of these jobs depends on factors such as number of samples, annotators per sample, and locale of annotators.

The Defined.ai API workflow prides itself on exemplary speed without sacrificing accuracy or quality. In fact, for locales like US English, Defined.ai can achieve over 75,000 judgments within 24 hours.
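To get a feel for what that figure means for a given job, here is a back-of-the-envelope estimate. It assumes that the total number of judgments is samples multiplied by annotators per sample, and it treats the 75,000-judgments-per-day figure as a flat rate, which is a simplification: actual turnaround varies with locale and job design.

```python
def total_judgments(num_samples: int, annotators_per_sample: int) -> int:
    """Each sample is judged once by every annotator assigned to it."""
    return num_samples * annotators_per_sample


def estimated_hours(
    num_samples: int,
    annotators_per_sample: int,
    judgments_per_day: int = 75_000,  # figure quoted above for US English
) -> float:
    """Rough turnaround in hours, assuming a constant judgment rate."""
    judgments = total_judgments(num_samples, annotators_per_sample)
    return judgments * 24 / judgments_per_day
```

For example, 10,000 samples with 3 annotators each is 30,000 judgments, which at this assumed rate works out to roughly 9.6 hours.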

Downloading Your Results

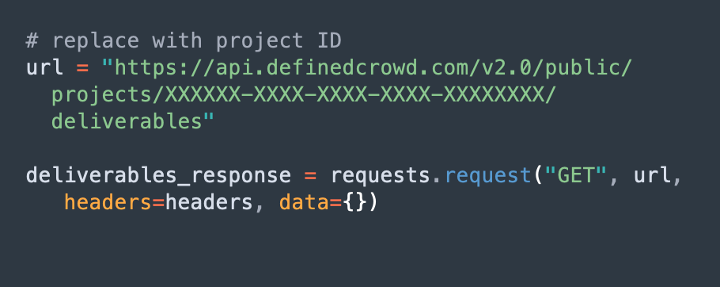

The delivery files will be made available via the delivery endpoints upon completion. There are multiple delivery files that describe things like the metadata of the crowd members themselves and the actual judgment results. To download the file that we want, the judgment results, we must follow a two-step process.

First, you must query your project ID to see what delivery files are available. We do this with the get deliverables endpoint:

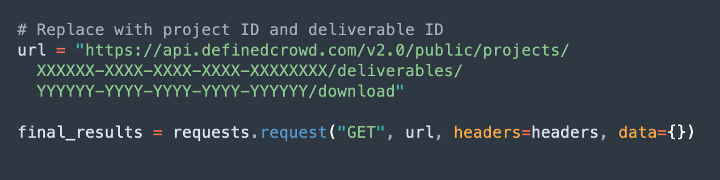

From there, if the project is complete, you will see a list of deliverables. Grab the “deliverable ID” of the file you wish to download; you can then download the file from the get deliverable download endpoint.
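The two-step process can be sketched as follows: list the deliverables, pick the ID of the judgment results file, then build the download URL. The base URL, the paths, and the `id` and `type` keys in the listing are assumptions made for illustration, not the documented response format.

```python
from typing import Dict, List, Optional

# Hypothetical base URL; replace with the one from the API documentation.
BASE_URL = "https://api.example.com/v1"


def deliverables_url(project_id: str) -> str:
    """Step 1: URL that lists the deliverables of a project (path is assumed)."""
    return f"{BASE_URL}/projects/{project_id}/deliverables"


def pick_deliverable_id(deliverables: List[Dict], wanted_type: str) -> Optional[str]:
    """Return the ID of the first deliverable of the wanted type, if any."""
    for item in deliverables:
        if item.get("type") == wanted_type:  # "type" key is assumed
            return item.get("id")  # "id" key is assumed
    return None


def download_url(project_id: str, deliverable_id: str) -> str:
    """Step 2: URL that downloads a single deliverable (path is assumed)."""
    return f"{BASE_URL}/projects/{project_id}/deliverables/{deliverable_id}/download"
```

Returning `None` when no matching file exists gives the caller a simple way to detect that the project is not yet complete and to retry later.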

Review Results

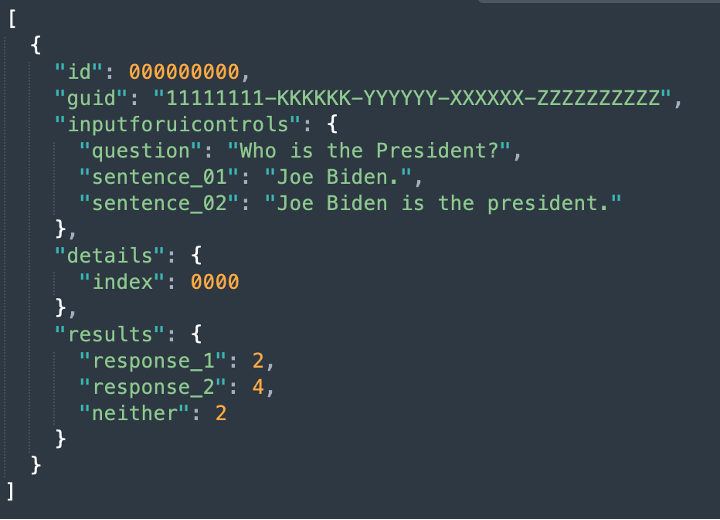

And there you have it. Thirteen lines of code that demonstrate the key steps for launching and delivering HITL annotations for large language models. Here is a sample of a real project conducted for a customer in 2022, judging “preference” between a model’s responses to a prompt.
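Because several annotators judge each sample, a common first step when reviewing a “preference” job is to reduce the judgments to one answer per sample. Below is a minimal majority-vote sketch; the `sample_id` and `preferred` keys are hypothetical and will not necessarily match the columns of the actual delivery file.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional


def majority_preference(judgments: Iterable[dict]) -> Dict[str, Optional[str]]:
    """Return the most-voted response per sample, or None when the top votes tie."""
    votes = defaultdict(Counter)
    for row in judgments:
        # Key names are assumed for illustration.
        votes[row["sample_id"]][row["preferred"]] += 1

    result = {}
    for sample_id, counter in votes.items():
        ranked = counter.most_common()  # sorted by vote count, highest first
        top_choice, top_count = ranked[0]
        tied = len(ranked) > 1 and ranked[1][1] == top_count
        result[sample_id] = None if tied else top_choice
    return result
```

Ties are returned as `None` instead of being broken arbitrarily, so that ambiguous samples can be flagged for another round of judgments.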

This was only the “MVP” functionality offered by the Defined.ai API. Refer to the documentation to learn about getting automated notifications, editing job configurations, and much more.

APIs for the Future

As large language models become more prevalent in our daily lives, the demand for high-quality, accurate data to train them increases as well. HITL annotation is a key part of this process, but it can be time-consuming and costly to undertake without the right tools. Using the Defined.ai API for HITL annotation not only speeds up the process but also ensures that the data is of consistently high quality, enabling language models to be trained to perform at their best. In today’s fast-paced world, speed and accuracy are paramount, and leveraging the power of an API solution is an essential step toward staying competitive.

Learn more about the Defined.ai API today: contact us here.

(OK, yes, in reality, a few more lines of code, such as getting the authentication token, would be needed to make this flow function, so it’s a little more than thirteen. But hey, I could have skipped a few lines myself; instead I decided to give you nice variables for the sake of readability. Let’s agree to disagree and just say it’s altogether not too much code.)